The puzzle that is AI success

Summary

According to a recent MIT report, 95% of enterprise GenAI pilots fail. Could it be that people have experiment fatigue and that companies are taking a piecemeal approach to AI innovation? Perhaps the key to AI success lies in placing bets on a companywide AI platform, simplifying the choice architecture for workers, and moving from experiments to delivering value.

I head out on a month’s break from work next week, so this’ll be my last article until I return. As someone responsible for AI transformation at my workplace, I found that a recent, sobering report from MIT grabbed my attention. The report claims that 95% of GenAI pilots at companies are failing. Despite $30–40 billion in enterprise investment in GenAI, most projects yield no measurable P&L impact.

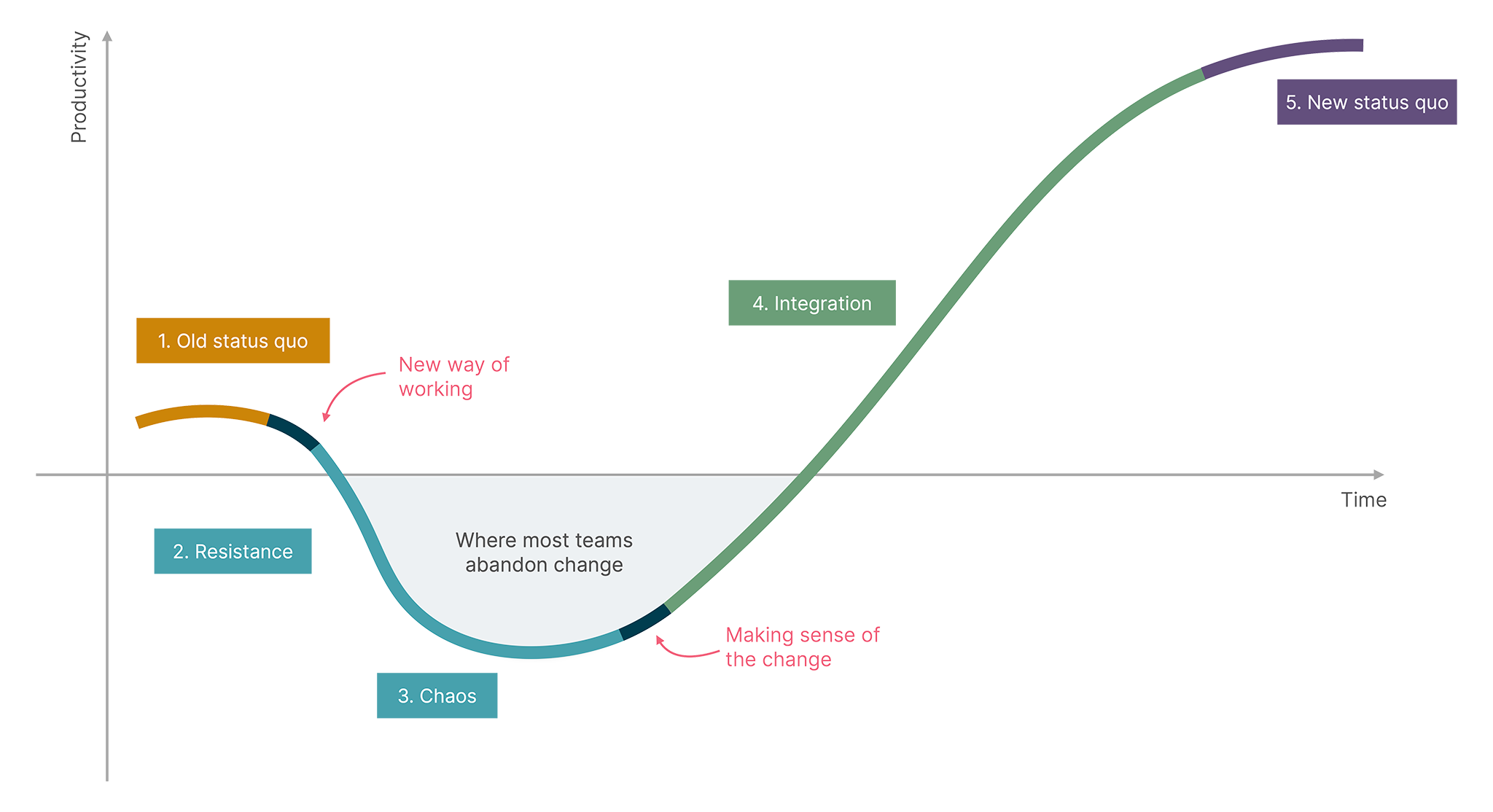

Some of these results are unsurprising. Virginia Satir’s change model tells us that a disruption to the status quo typically passes through phases of resistance and chaos before teams and organisations make sense of the change and arrive at a new status quo that embraces it.

Change is never a straight line. Chaos and dips in productivity are inevitable.

I’m chuffed about the report because it’s a counterbalancing voice against the constant AI cheerleading. AI can be transformative, but that transformation isn’t magic. The more breathless and impatient we get about seeking transformative change, the less likely we’ll be to achieve transformation.

As I unwind from work and get myself into vacation mode, I want to reflect on some considerations that may influence the success of AI transformation programs at a corporation.

Busy people dislike experiments

If you work in tech or at a tech-adjacent company, I’d wager that many of you are experiencing “AI experiment fatigue”. I’d also wager that many of you have tuned out of the experiments, even if you follow them out of academic interest. For many professionals, layoffs at their companies have increased their workloads. They’re busier than ever.

Spare a thought for these busy people. Why would they drop a way of working that’s stood them in good stead for years and try something experimental? In tech, salary hikes have shrunk. What’s the upside of disrupting oneself? Most people are right to sit back, watch others thrash around, and commit only when the company makes up its mind about the change.

Experiments are costly

While it’s easy for an individual to use ChatGPT one day, Perplexity the next, and Gemini or Claude the day after, organisations aren’t that agile. Making a tool available to even a dozen people takes paperwork, compliance, integration, user education, and technical and admin effort. The more integrated the tool, the costlier the experiment.

It’s not as if companies are willing to increase their operational capacity just because they have loads of AI experiments to run. Beyond a point, experiments don’t just paralyse the workers; they paralyse the company at large. Too many experiments also ruin a company’s choice architecture.

Choice can be mind-numbing

Consider a company that has three major platforms at play:

Office 365

Atlassian Together

Perplexity

Each of these tools comes with its own AI. They have distinct capabilities, but they also overlap considerably. Microsoft’s Copilot can act as an AI assistant, and so can Perplexity or Atlassian Rovo. You can build agents with Copilot and Rovo, and Perplexity natively acts in agentic ways too. For many users, the choice is needlessly bewildering, and many stick with the status quo rather than make a choice at all. When managing a company’s AI estate, transformation consultants have several questions to answer:

Which choice are you nudging your users to make?

Which choice yields the highest company-wide impact?

Which choices should you turn off, so you don’t confuse users?

As far as possible, this choice architecture should be simple, visible throughout the company, and reflected in its AI investments.

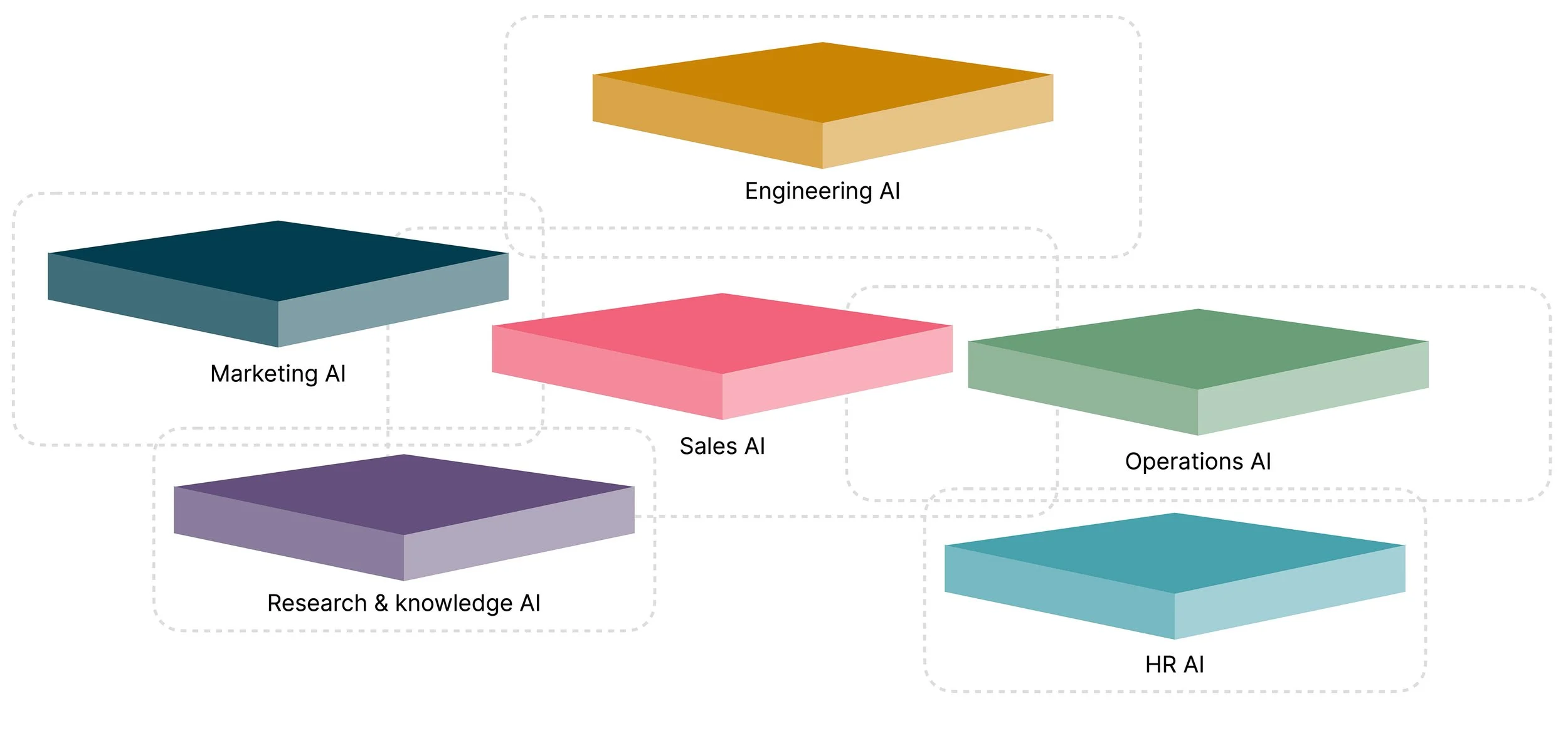

Too many AI systems don’t lead to an ecosystem

Everyone and their cat has an AI system these days. Several of these systems support very narrow use cases. Some do targeted email outreach. Others handle RFP responses. Some build elegant mockups. Others help you curate research that informs these mockups. As companies deal with their own AI FOMO, tool proliferation is inevitable. A reasonable level of tool proliferation is even desirable.

But beyond that reasonable level, multiple tools don’t lead to high-quality, specialised outcomes. Instead, they lead to silos and fragmented user experiences. Companies can’t leverage economies of scale and network effects across the boundaries of fragmented systems, even when they use the same underlying technology.

Too many AI systems lead to silos, inconsistent UX and fragmented innovation efforts

When you dig deeper, you’ll notice that while domain-centric AI applications have value, many domain-centric use cases overlap. The applications that unlock these use cases often need to draw on company-wide knowledge and standards, and to build on each other in the process. For example, an agent that writes in the company’s corporate voice is as useful for RFP responses as it is for software specifications or marketing content.

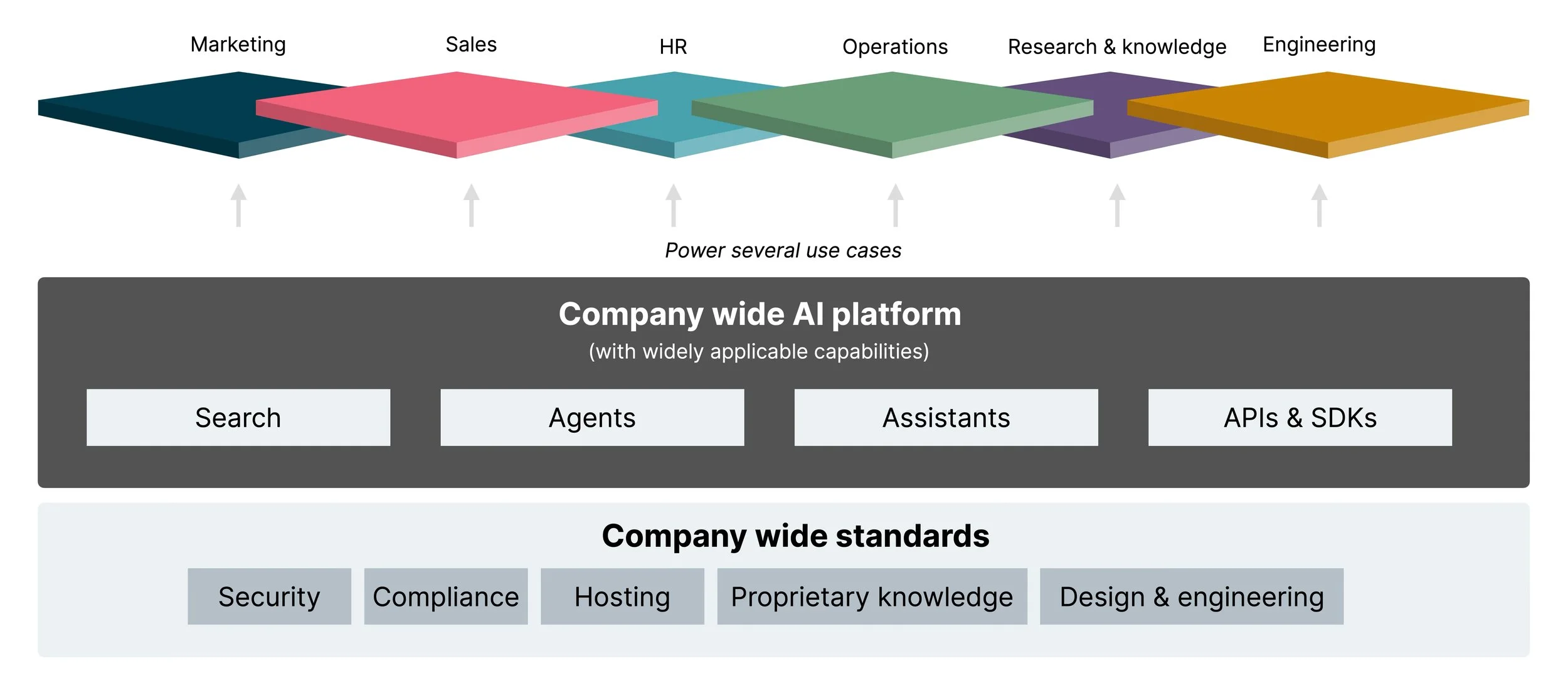

Specialised software has its place. Company-wide AI innovation, however, needs a company-wide AI platform.

Company-wide platforms can give every department and employee a way to power their domain-specific use cases

Is it time to place bets?

In 2025, it’s natural for companies to believe that AI models and tools are advancing at a frightening pace. It’s also natural to assume that getting locked into one platform may limit a corporation’s agility in the future. But a sobering truth may be on the horizon. The jump from GPT-4 to GPT-5 isn’t as big as the jump from GPT-3 to GPT-4 was. It’s fair to ask, as Cal Newport and many others do, whether model capability will soon hit a plateau. Change may not be as frenetic as we believe it to be.

Even if Newport, Gary Marcus and other AI bears are wrong, there’s always a competitive window to make the most of new technology. The innovators and early adopters take on most of the risk with new technology, but the early majority gains the most upside. A company that adopts new technology late in the cycle gets only plateaued benefits.

Being late to the AI party could mean a weaker competitive advantage

In my view, it’s time for companies to stop hedging and place their bets. The idea should be to pick innovative platforms that best serve your use cases and back them to keep innovating. Don’t lock yourself into a single vendor for all time, but at least decide on a strategic platform architecture.

Of course, every company wants to preserve the option to move to another platform. Preserving specifications is one way to keep that option open, an idea Sean Grove explains in a recent talk. The question is: which platform(s) will afford you such optionality?

Embrace the consumerisation of AI

When we step away from the white noise of AI cheerleading, a few facts are abundantly clear.

ChatGPT is still the most widely used generative AI consumer tool.

Q&A and understanding complex topics are still the most common use cases for AI.

Many knowledge workers use AI tools in their personal lives.

The internet exposes workers to high-quality AI user experiences.

Often, AI at the workplace doesn’t match the user experience of consumer AI.

The takeaway? Workers use AI whether or not the company provides them with the tools. “Shadow AI” is real. Last year, Microsoft reported that 78–80% of workers were bringing their own AI tools to work (BYOAI). Why wouldn’t they? If AI at the workplace lags behind personal-use AI, people will do what they can to boost their effectiveness at work. Individual productivity isn’t trivial!

But the consumerisation of AI is a blessing in disguise. Knowledge workers are becoming quite sophisticated in their use of AI, and AI patterns are becoming ubiquitous. Over time, AI training and change management costs should taper off. The key to success will lie in helping employees apply their self-taught skills on company-wide platforms that bring the AI patterns they’ve seen on the consumer web into the workplace.

So, as 2025 rolls into its final months, what might the formula for AI success involve? I reckon it’s about reducing uncertainty and making bold commitments. CIOs and CTOs have a few questions to ponder.

How can their organisation scale from experiments to rollouts?

How can they defragment their enterprise AI ecosystem?

How can they design a simple AI choice architecture for their employees?

Which company-wide AI platform leverages ubiquitous AI patterns so that every employee can be an AI innovator?

How can they foster a network of AI builders across the company who reuse each other’s patterns to solve problems for their departments?

AI is so new that anyone who claims to have the answers to these questions is probably a charlatan. Every company will discover context-specific answers to these questions, and some of those answers likely won’t be obvious. The idea, however, is to keep asking!